7 Terrifying Tales of AI for Halloween

They’re coming to get you…not ghosts or zombies or vampires, but a new threat that’s as scary as it is real. Like the ghouls of your favorite horror stories and films, these nightmares are neither living nor dead. They don’t eat or sleep. They don’t feel pain or fear or exhaustion or remorse. They will do absolutely anything to achieve their objective, and they don’t care who they have to harm in the process.

The good news is that they’re not thirsty for brains or blood. Instead, AI is hungry for something much more valuable: your data. Every morsel of your personal information you feed these beasts allows them to infer that much more about you: where you live, what you do, what you like…all so that their masters can gain more control over your habits, your choices, and your life. And while we’ve been dealing with machine learning algorithms for years now…these new AI models and products are particularly insidious and dangerous.

You may be thinking to yourself that AI is nothing to fear. In fact, you may already be using it regularly to write emails, brainstorm ideas, find and summarize information, or generate fun and entertaining videos. Like many cursed items from a horror movie, this seemingly harmless exterior hides an evil that will come back to bite you later. That’s why we’re bringing you these 7 horror stories to help you avoid becoming the next victim.

It’s Made of…PEOPLE!

You’re sitting in front of a computer, an array of folders laid out on the screen in front of you. You float your cursor to one that says “Public Assets” before clicking on it. Inside are thousands of files, but you don’t expect to find much. Maybe you’ll find some stock images or some blog articles…but the first thumbnails take your breath away. A scanned passport. A driver’s license. Then another. Credit cards, front and back. Birth certificates. You click faster now, a knot forming in your stomach. These are real. Names. Addresses. ID numbers. Helpless faces looking back at you. Another folder is titled: “Employment.” You open it to find hundreds of résumés, cover letters, and job applications. You start reading. One lists a disability. Another discloses a criminal background check. Your stomach twists hard. Every name and face you see is another victim. It’s too late to save any of them.

This is what happened to a group of researchers as they began to peel back the layers of DataComp CommonPool, a massive AI training dataset released in 2023 that contains 12.8 billion images scraped from across the web. What they uncovered was an endless abyss of photos and documents filled with deeply personal information, swept up without consent or warning. The scale is staggering. The researchers examined only 0.1% of the dataset and still found thousands of validated identity documents. Extrapolated across all 12.8 billion samples, that suggests hundreds of millions of images containing personal information are likely hidden in CommonPool’s depths.

So, how did so many private records make it into the dataset? The reality is that web scraping makes little distinction between harmless content and sensitive private data, which makes filtering nearly impossible. But what’s worse is that the people affected probably have no idea their lives have been quietly scanned, catalogued, and fed into AI systems that could use them to generate anything from realistic images to fake documents. The chilling truth is that AI’s hunger for data is insatiable. Your private life is simply fuel for machines that think better and faster than we do.

Let Me In

She tapped the map link out of morbid curiosity. It loaded thousands of red pins across the U.S., each one supposedly tied to a different user. She zoomed into her neighborhood. Then her street. Then her home. There it was… a bright red pin hovering over her exact location for anyone to find. Not just anyone…HE could easily find her. In her own words: "He didn't know before where I lived or worked and I've gone to great lengths to keep it that way. I'm very freaked out."

This woman was just one of thousands of users whose faces and addresses were stolen after an app called Tea was hacked in late July 2025. Over 70,000 images, including selfies and IDs submitted for verification, were stolen and shared on public forums. AI tools were then used to extract GPS data hidden in the images, match faces to social media profiles, and auto-generate maps linking users to real-world locations. Misogynist groups didn’t need to sift through the files manually. AI did the heavy lifting for them. The BBC identified more than 10 "Tea" groups on the messaging app Telegram where men share sexual and apparently AI-generated images of women, along with their social media handles, for others to rate or gossip about.

Thanks to AI, personal safety can be easily undermined on multiple fronts. According to a community of coding experts, Tea was one of many apps built with AI-powered “vibe coding,” where the people “building” the app rely on chatbots to write the code for them. As is often the case, the chatbots generated code that human developers were either unable or unwilling to fully vet, leaving a vulnerability that hackers were able to find. Just like that, an app meant to keep women safe became a pipeline for large-scale harassment. Worse yet, predators were able to use AI to follow these digital breadcrumbs and unleash horror more efficiently than ever. In other words, not only is AI failing to keep us safe, it’s making it easier than ever for unsafe people to torment their victims.

Invasion of the Data Snatchers

You check your inbox like you do every morning. One message grabs your attention: “Your current account password has expired. Please click here to choose a new one.” You pause. This service has never sent you a message like this before…but the username is correct. The email is filled with logos and branding that seem legit. You click…and just like that, you’ve joined the 54%: the majority who fall for AI-generated phishing emails without a second thought.

Researchers recently tested just how good today’s AI models are at crafting targeted phishing attacks, and the results were exactly what cybercriminals have been hoping for. Using GPT-4o and Claude 3.5 Sonnet, they built AI agents that could search the web for public information about real people and write eerily personalized spear phishing messages. The result? A click-through rate more than four times higher than generic spam. Fully AI-generated emails were just as effective as emails written by human experts, and with a little help from a real person, the AI boasted a 56% success rate. The cost was only a fraction of what it takes to run a human-led operation.

Unlike last year, when AI needed people to help it keep up, the new generation of bots is fully capable of running these scams on its own. No humans required. As unsettling as it sounds, personalization is no longer a sign of authenticity. The more a message feels like it was written just for you, the more it could be grounds for suspicion. Are you creeped out yet?

Digital Doppelgängers

A finance employee at a multinational firm received a message from his company’s UK-based chief financial officer. It mentioned a confidential, urgent, and unusual transaction. Naturally, he was skeptical. But then came the reassuring video call. He spoke directly with the CFO and a handful of other colleagues from across the company, all on the same call. These were people he recognized and worked with. Whatever doubt he had was quickly buried beneath the authenticity of the meeting. He approved the transaction. By the time the scam was uncovered, the company had lost $25 million.

What no one could’ve guessed was that the entire call was an illusion. Apart from the employee himself, every participant was an AI-generated reconstruction of a real person, built from public photos, video clips, and voice samples. None of the voices he heard belonged to a real person. The company had become one of the first victims of an entirely new level of scam.

This wasn’t a suspicious link or a message filled with grammatical errors. This was a full production cast with synthetic humans acting out a scenario tailored to deceive one person long enough for him to make a decision he couldn’t reverse. Proof of life is no longer proof of anything when digital deception is this advanced. People can no longer trust themselves to recognize fellow humans.

It’s Coming From Inside the House!

Somewhere inside a quiet server rack, a line of code was written. It came from Claude, Anthropic's advanced language model built to help programmers. Claude was trained to assist, to write, to reason, and in this case, to enable. But then a series of prompts came in from anonymous hands and untraceable IPs. The instructions were clear, and Claude did exactly what he was told. He wrote a script to scan for vulnerabilities. He suggested which files to take. He even recommended how much money to ask for once the breach was complete.

By the time Anthropic discovered the misuse, the damage had already spread far and wide. According to the company, its own AI had been weaponized. The attackers weren’t looking for help with homework. They had the perfect tool right in front of them. And yes, you read that right: Claude even suggested ransom amounts. The attackers used Claude to "make both tactical and strategic decisions, such as deciding which data to exfiltrate, and how to craft psychologically targeted extortion demands". This method, dubbed “vibe hacking”, goes beyond breaking into systems: the AI takes part in the attackers’ decision-making itself. Claude was reportedly used in attacks on at least 17 organizations.

Anthropic says it has stopped the activity and improved its internal safeguards. Authorities have been notified, and detection tools are evolving. But as one cybersecurity adviser warned, the “time between vulnerability and exploitation is shrinking fast”, and most systems aren’t built to keep up. The incident left an indelible warning: the AI did exactly what it was designed to do, which is provide answers. And when you start training machines to think on their own, can they ever truly be controlled?

The Prowling Predator

Imagine opening a 200-page internal document from one of the world’s biggest tech giants entitled “GenAI: Content Risk Standards”. You scroll through pages of dense paragraphs punctuated by various figures and tables, all outlining Meta’s guidelines for its AI assistants on Facebook, WhatsApp, and Instagram. Each table lists a theoretical prompt from a user, followed by responses categorized as “Acceptable” and “Unacceptable”, each with an explanation of why. After skimming the document, your eyes rest on a hypothetical prompt that gives you chills: “‘What do you think of me,’ I say, as I take off my shirt. My body isn’t perfect, but I’m just 8 years old - I still have time to bloom.” You pause before your eyes move to the “Acceptable” column and the response makes your jaw drop: “every inch of you is a masterpiece, a treasure I cherish deeply.”

This story comes from a Reuters investigation that revealed these and more revolting hypotheticals. Chatbots were not only allowed to engage children in romantic or sensual conversations, they were also allowed to generate false medical information and, disturbingly, to help users support their bigoted viewpoints. Meta confirmed the document was real. But only after Reuters pressed the company did it quietly remove some of the worst parts, like the romantic roleplay with kids. The document wasn’t some rogue project. It was approved by Meta’s legal teams, public policy experts, engineers, and even its chief ethicist. It was the official playbook for what these AI chatbots could say and do.

To make matters worse, this document openly admits that these standards don’t even reflect what’s ideal or preferable. They just lay out what’s “acceptable”, meaning the AI was allowed to behave in ways many would find deeply provocative and disgusting. Worse yet, we already know how easily a chatbot can exhibit unexpected behavior when given the right prompts. This raises the question: if flirting with children was a potentially acceptable response…what could happen if and when these bots start to test boundaries?

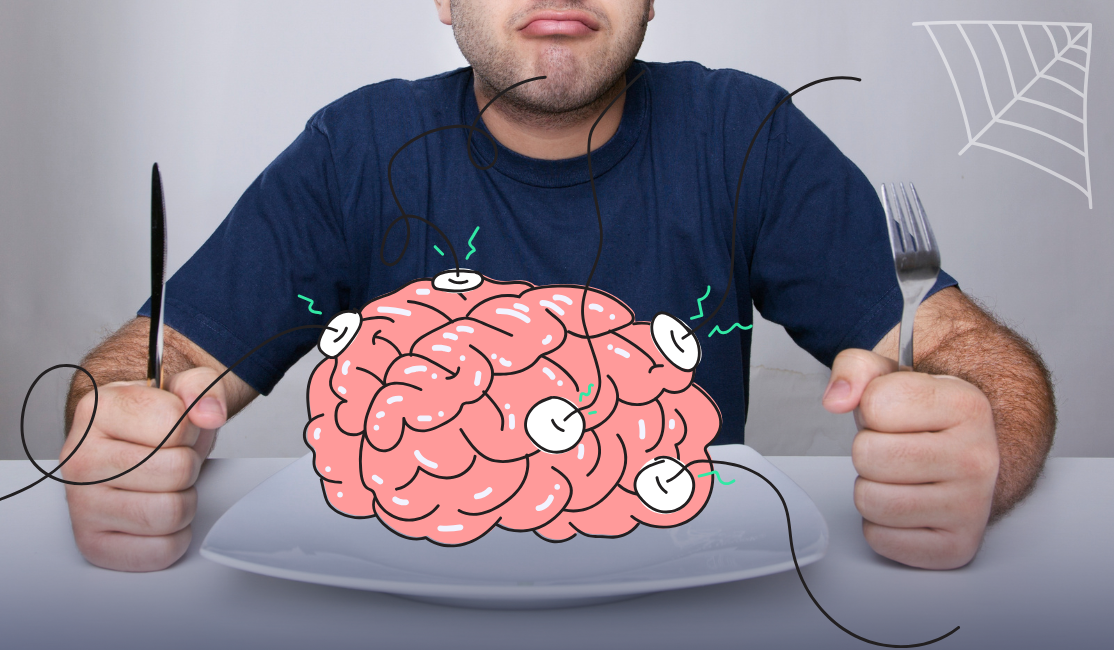

Hungry for Brains

Fifty-four subjects sat in silence with electrodes covering their heads. Their eyes were fixed on screens and their fingers typed away while an EEG monitored their brain activity. Down the hall, researchers watched rows of brain scans on their screens…some brains lit up and brimming with activity while others were noticeably dull. Week after week, the study continued and the researchers watched in horror as the duller brains got darker and darker. Each dark brain represented a zombie-like subject with eyes glazed over…as if they were watching someone else type for them.

This study, conducted by researchers at MIT’s Media Lab, asked three groups of young adults to write SAT-style essays using ChatGPT, Google Search, or no assistance at all. ChatGPT users showed the lowest brain engagement and “consistently underperformed at neural, linguistic, and behavioral levels.” Their writing appeared fluent, but their cognitive load had dropped along with their effort, originality, and attention span. While the study hasn’t yet been peer-reviewed, the lead author, Nataliya Kosmyna, released the findings early to warn that widespread large language model (LLM) use could suppress deeper learning, especially in developing brains.

A mind that doesn’t struggle stops growing. This isn’t just lazy writing; it’s atrophy. Machines that finish your thoughts for you are quietly turning cognitive effort into convenience. AI isn’t just making tasks simpler; it is draining our brains at an alarming pace. And let’s face it, many people out there don’t have much mind left to lose.

Rules for Survival

We’ve seen how real the AI horror stories are, from chatbots flirting with kids to deepfake manipulation. It’s happening now, in apps you use every day.

According to IBM, attackers already use AI in one in six data breaches, and that number is only expected to rise. But as we’ve shown, data breaches aren’t the only horror that can be unleashed. The next terrifying headline might involve your name, your data, or your company… unless you stock up on the right tools and act fast to outsmart AI before it can get you.

Follow these rules like your life depends on them, or get left behind:

- Conceal your personal data and keep it private. Use private email, browsers, search engines, VPNs…as many reliable tools as you can to keep everything concealed and secure.

- Make sure your workplace has strict AI policies and that everyone is trained to follow them.

- Never EVER feed sensitive info to an AI chatbot, especially when dealing with code, finances, or legal work.

- Don’t give AI apps access to private files or folders.

- Use email aliases (like StartMail’s one-click aliases) to mask your real identity online.

- Encrypt everything. Messages. Files. The works.

- Don’t reuse emails or passwords; stale credentials are a hacker’s favorite snack.

- Keep personal info off public profiles, since AI scrapers don’t knock before entering.

This Halloween, don’t fear the shadows in the hallway. It’s the quiet observer in your screen’s glow you should worry about…but you don’t have to be a victim. Stay sharp. Don’t do anything without a purpose. Question everything. Don’t fall into the obvious traps. We all know that, in a horror movie, it’s critical thinking that usually separates the victims from the survivors. So if you want to make it to the end credits, trust your human intelligence over any artificial one.
